I will be on vacation from October 16th through the 23rd in Toronto, and giving a lecture at Seneca on the 20th. If you are in the area and want to hang out, feel free to give me a buzz.

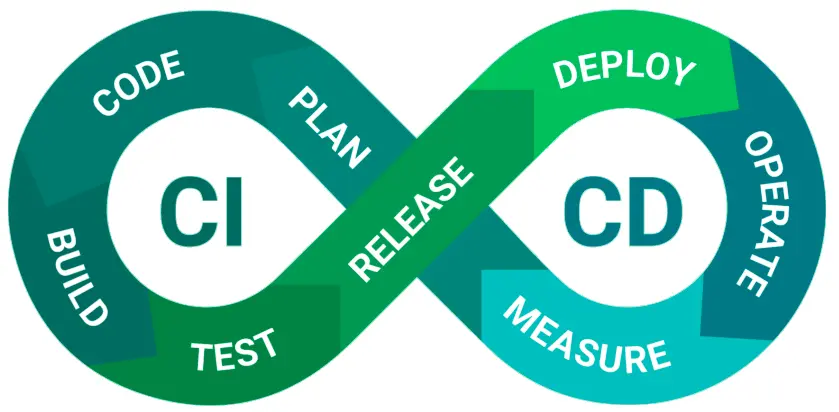

The release automation work is in a reasonably happy place. I have managed to write and test the following steps, and have posted patches for review:

- Tag

- Build

- Source

- Repack

- Updates

The Stage step is actually mostly tested, but I keep running out of disk space on the staging machine, so I’ll need to get creative on that one. Sign is fairly simple and mostly manual (which is desirable), and Release is fairly simple (but obviously critical!): it’s the act of copying the staged/signed bits to the official release directories.

One thing I feel I must mention is that this tool does not necessarily support what we consider the ideal process - it instead supports the process that we use, and that is known to work.

However, it is difficult to introduce beneficial changes and explore alternatives, since we haven’t had a good staging environment or a set of verification tests to make sure that we haven’t introduced any undesired side effects.

The trickiest bit of this isn’t so much the steps themselves as having some kind of automated verification that each step succeeded, so we can trust that running the next one won’t be a waste of time.
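As a rough illustration of that idea (not the actual release tool), here is a minimal Python sketch in which every step carries its own verification check and the run stops at the first step that fails it. The `Step` type, the `run_pipeline` function, and the callables passed in are all hypothetical names invented for this example.

```python
from typing import Callable, List, NamedTuple, Optional


class Step(NamedTuple):
    """One release step plus the check that tells us whether it worked (hypothetical)."""
    name: str
    run: Callable[[], None]
    verify: Callable[[], bool]


def run_pipeline(steps: List[Step]) -> Optional[str]:
    """Run steps in order, verifying each one before starting the next.

    Returns the name of the first step whose verification fails,
    or None if every step ran and verified cleanly.
    """
    for step in steps:
        step.run()
        if not step.verify():
            # Stop here: running later steps on bad output would waste hours.
            return step.name
    return None
```

In a sketch like this, the interesting work lives in each `verify` callable (checking that a tag exists, that a build produced the expected files, and so on); the loop itself stays trivial.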

Our current process is very human-time-intensive, since a release engineer needs to kick off and verify each step, and some of the steps take several hours by themselves (builds and update generation/verification, primarily). If something goes wrong (due to an unexpected change in the product, a bug in one of the tools, or just Murphy’s Law) then we need to determine the last “good” step and restart from there.
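Finding the last “good” step can be sketched in a few lines of Python. This is only an illustration: the step order is assumed from the order in which the steps are named in this post, and `last_good_step` and `steps_to_rerun` are hypothetical helpers, not part of any existing tool.

```python
from typing import Dict, List, Optional

# Assumed order, taken from the order the steps are mentioned in this post.
STEPS: List[str] = [
    "Tag", "Build", "Source", "Repack", "Updates", "Stage", "Sign", "Release",
]


def last_good_step(verified: Dict[str, bool]) -> Optional[str]:
    """Return the last step in the unbroken run of verified steps, or None."""
    last = None
    for name in STEPS:
        if not verified.get(name, False):
            # Anything after the first unverified step cannot be trusted.
            break
        last = name
    return last


def steps_to_rerun(verified: Dict[str, bool]) -> List[str]:
    """Return every step after the last good one, in order."""
    last = last_good_step(verified)
    start = 0 if last is None else STEPS.index(last) + 1
    return STEPS[start:]
```

Note that a step which verified after an earlier failure is still rerun, since its input may have been bad.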

Automated verification does of course have a point of diminishing returns, and Mozilla-based products are complicated enough that this doesn’t really provide any direct QA benefit, besides not wasting our testers’ valuable time on something a dumb computer can catch (like a bad tag, a bad build, mismatched or nonexistent update paths, etc.).

The other big downside to a human operator being the default is that humans function much better with sleep and time off (prolonged focus being bad for overall concentration), and shepherding a release by hand is a bad use of creative energies. An automated process doesn’t need to pause between steps, and won’t introduce variation through attempts at creativity. The place to be creative isn’t within the scope of a release, but in thinking about and improving the overall process (generally best done in between releases, based on the lessons learned from past ones).

It should of course be possible for a human to jump in and drive the process if needed, especially to fix and rerun steps that failed because of an intermittent problem, a bug in the tools, etc. That should hopefully not be the norm, but it’s a reasonable use case for this kind of tool.

The ideal use case that I can think of right now would be: “code is frozen; declare and obtain sign-off for names/numbers/locales/etc. and kick off the release process”. Respinning to pick up source changes is achieved by a variant of the Tag step, after which the process is restarted and runs through the same Build->Stage steps until we’re happy with it.